Kubernetes Pod Placement Strategies

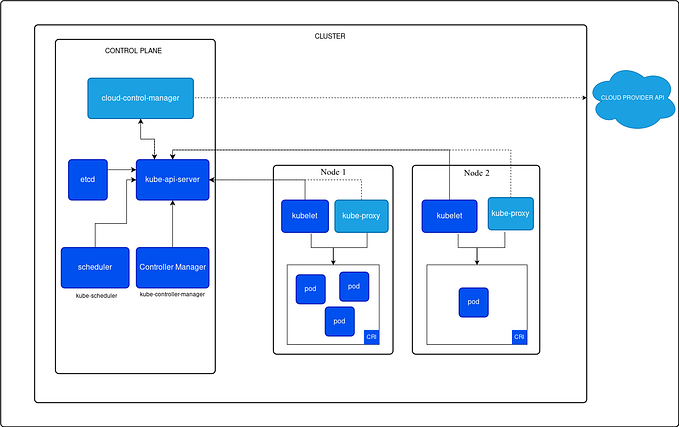

Kubernetes is a platform that lets developers automate the management of their applications in containerized deployments.

A Kubernetes cluster can consist of several nodes. By default, the Kubernetes scheduler is free to place the pods for your applications on any node that can run them. What if you want to place pods strategically, so that a selected set of pods runs only on specific nodes? Or what if you want to keep some pods off certain nodes in the cluster?

Kubernetes provides the capability to achieve this using a few concepts.

- Taints and tolerations

- Node selectors

- Node affinity

1. Taints and Tolerations

Generally, the word taint means to contaminate. In the Kubernetes world, we taint (or contaminate) nodes in the cluster. By doing this, we tell the Kubernetes scheduler not to place pods on the tainted nodes unless they tolerate the taint.

Now the question is, how can we schedule pods on the tainted nodes? That’s where tolerations come into play. The word toleration suggests resistance towards something. Similarly, a toleration makes a pod resistant to a taint, so the tainted nodes can accept pods that carry the matching toleration.

But a toleration does not guarantee that the pod will be placed only on the tainted node. If other nodes are not tainted, the pod can be placed on them too, as untainted nodes are free to accept any pod.

Now let’s see how we can enable this in Kubernetes.

1. Taint

You can use the kubectl taint command to taint the nodes.

    kubectl taint nodes <node-name> <key>=<value>:<taint-effect>

With the above command, the tainted node is assigned a key-value pair (a taint) along with a taint effect.

What is taint-effect?

The taint-effect describes what should happen to the pods that do not tolerate the taint. There are 3 pre-defined effects, as follows.

- NoSchedule: Do not place pods on the node unless they tolerate the taint.

- PreferNoSchedule: Try to avoid scheduling pods that do not tolerate the taint. Not guaranteed.

- NoExecute: New pods that do not tolerate the taint are not scheduled, and pods already running on the node are evicted if they do not tolerate it.

Example cmd:

    kubectl taint nodes node01 layer=frontend:NoExecute

The taint layer=frontend is applied to the node named node01 with the NoExecute taint effect, meaning that the existing pods on node01 will be removed if they do not have a toleration for the applied taint.
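For reference, a taint is stored in the node's spec. The excerpt below is a rough sketch of how the relevant part of node01 might look after the example command, for instance in the output of kubectl get node node01 -o yaml. All other fields are omitted.

```yaml
# Excerpt of a Node object; only the fields relevant to the taint are shown.
apiVersion: v1
kind: Node
metadata:
  name: node01
spec:
  taints:
  - key: layer          # <key> from the kubectl taint command
    value: frontend     # <value> from the kubectl taint command
    effect: NoExecute   # <taint-effect> from the kubectl taint command
```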

2. Tolerations

A toleration is applied to a pod to mark that the pod can be placed on a node with a particular taint.

To do this, specify the tolerations in the pod spec. For the example used above, the relevant tolerations config would be as below.

    tolerations:
    - key: "layer"
      operator: "Equal"
      value: "frontend"
      effect: "NoExecute"

An important fact to remember is that the toleration does not guarantee that the pod will be placed only on the tainted node. If other nodes are not tainted, the above pod can be placed on those nodes as well, as untainted nodes are free to accept any pod.
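To show where the tolerations block sits, here is a minimal sketch of a complete Pod manifest that tolerates the example taint. The pod name, container name, and image are placeholders chosen for illustration and are not from the example above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend-pod        # hypothetical pod name
spec:
  containers:
  - name: web               # hypothetical container name
    image: nginx            # placeholder image
  tolerations:
  # Matches the taint layer=frontend:NoExecute applied to node01.
  - key: "layer"
    operator: "Equal"
    value: "frontend"
    effect: "NoExecute"
```

If the value of the taint does not matter, the operator can be set to "Exists" and the value field left out, in which case the pod tolerates any taint with the key layer and the given effect.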

2. Node Selector

The node-selector is another strategy, where you configure pods to be placed on specific nodes. For this, you have to do 2 things.

- Label the node so that the pods can identify it.

- Configure the pod with nodeSelector, providing the label, so that it is scheduled only on the matching nodes.

But this does not guarantee that the node will accept only the specifically labelled pods.

The limitation of node-selector is that it can only check whether the node's labels are equal to the nodeSelector provided in the pod. It cannot check any other conditions. Let me explain it using an example.

Suppose your cluster has 8 nodes with the following labels:

2 prod nodes, 2 staging nodes, 2 dev nodes and 2 nodes without any label.

Now you want your pods to be placed on either staging or dev nodes. Or you want to place your pods on any nodes other than the prod nodes. For these types of requirements, you can use node affinity and anti-affinity, which we will discuss later.

1. Labelling nodes

You can easily label your nodes using the kubectl label command.

    kubectl label nodes <node-name> <label-key>=<label-value>

Example cmd:

    kubectl label nodes node01 type=optimized

With this command, node01 in the cluster is assigned the label type: optimized.

2. Add nodeSelector to the pods

The nodeSelector should be added to the pod-spec section as mentioned below.

    nodeSelector:
      type: optimized

This makes sure that the pod is placed only on a node with the type: optimized label.
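As a minimal sketch, the nodeSelector fragment fits into a full Pod manifest as shown here. The pod name, container name, and image are placeholders for illustration only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: optimized-pod       # hypothetical pod name
spec:
  containers:
  - name: app               # hypothetical container name
    image: nginx            # placeholder image
  # Schedule only on nodes that carry the label type=optimized,
  # such as node01 after the kubectl label command above.
  nodeSelector:
    type: optimized
```

If no node carries the label, the pod stays in the Pending state until a matching node becomes available.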

3. Node Affinity

Node affinity lets developers place pods on nodes under more complex conditions than node selectors can express. There are two steps.

- Label the node using the kubectl label command.

- Configure the affinity in the pod definition.

Similar to nodeSelector, this strategy also does not guarantee that the node will accept only the specifically labelled pods.

The nodeAffinity has to be specified in the affinity field in the pod specification. As of now, there are two types of nodeAffinity.

1. requiredDuringSchedulingIgnoredDuringExecution

The scheduler will make sure that the pod will be scheduled subject to the provided affinity configurations/rules. If a matching node does not exist, the pod will remain unscheduled. This type does not have an effect for running pods in the event of a label change.

2. preferredDuringSchedulingIgnoredDuringExecution

The scheduler will try to place the pod according to the configured affinity rules. If no node satisfies them, the pod will be placed on another available node. This type does not have an effect on running pods in the event of a label change.

However, there is a planned affinity type (which is not yet available as of now), called requiredDuringSchedulingRequiredDuringExecution, which will remove the pods from the node if the node's labels change so that they no longer match. The scheduling process will be similar to the first affinity type we discussed above.
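The preferred type uses a slightly different structure from the required type: each rule is a preference with a weight between 1 and 100. Here is a minimal sketch, assuming you would like the pod to land on a staging node when one is available. The pod name, container name, and image are placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: staging-preferred-pod   # hypothetical pod name
spec:
  containers:
  - name: app                   # hypothetical container name
    image: nginx                # placeholder image
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1               # allowed range is 1-100; higher means stronger preference
        preference:
          matchExpressions:
          - key: env
            operator: In
            values:
            - staging
```

Because this is only a preference, the pod can still be scheduled on a node without the env: staging label.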

1. Labelling nodes

You can easily label your nodes using the kubectl label command.

    kubectl label nodes <node-name> <label-key>=<label-value>

Example cmd:

    kubectl label nodes node01 env=prod

With this command, node01 in the cluster is assigned the label env: prod.

2. Configure the affinity in the pod definition.

The affinity field should be included in the pod specification, as in the example given below.

    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: env
              operator: In
              values:
              - dev
              - staging

This makes sure the pod is scheduled on nodes which have the label either env: dev OR env: staging. The pod will not be scheduled on any other node.

Let’s look at another example. Imagine we want to schedule a pod on a node which does not have the env label. Then the sample affinity rule would be as follows.

    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: env
              operator: DoesNotExist

If a node does not have a label with the key env, the pod can be scheduled on that node.
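The earlier requirement of placing pods on any node other than the prod nodes can be sketched with the NotIn operator. The pod name, container name, and image below are placeholders for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: non-prod-pod        # hypothetical pod name
spec:
  containers:
  - name: app               # hypothetical container name
    image: nginx            # placeholder image
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          # Exclude nodes labelled env=prod.
          - key: env
            operator: NotIn
            values:
            - prod
```

In the 8-node example, this rule should match the staging and dev nodes as well as the two nodes without any label, since a node that has no env label does not carry the excluded value either.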

Combination of Taints and Tolerations with Node Affinity

Taints and tolerations fulfil one specific requirement, while node affinity fulfils another. Let me rephrase it.

Taints and tolerations: Make sure the nodes accept only the pods which tolerate their taints. Does not guarantee that a pod will be placed only on a specific node.

Node affinity: Make sure pods are placed only on the nodes that match the defined affinity rule. Does not guarantee that those nodes will not accept other pods.

Hence, to make sure that the nodes accept only certain pods, and that these pods are scheduled only on those specific nodes, you need to use a combination of both concepts.
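A minimal sketch of the combination follows. It assumes the dedicated node has been tainted with layer=frontend:NoExecute, as in the earlier example, and has additionally been given the label layer=frontend. That label, the pod name, the container name, and the image are assumptions made for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend-dedicated-pod   # hypothetical pod name
spec:
  containers:
  - name: web                    # hypothetical container name
    image: nginx                 # placeholder image
  # Toleration: allows the pod onto the tainted node.
  tolerations:
  - key: "layer"
    operator: "Equal"
    value: "frontend"
    effect: "NoExecute"
  # Node affinity: keeps the pod off every node without the label.
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: layer           # assumed node label, e.g. kubectl label nodes node01 layer=frontend
            operator: In
            values:
            - frontend
```

The taint keeps other pods off the node, while the affinity rule keeps this pod off every other node.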

Summary

Key points: Taints and Tolerations

Taint -> Applied to nodes so that they do not accept regular pods

Toleration -> Applied to pods to make them resistant to the tainted nodes

Does not guarantee pods will only be placed on tainted nodes

Key points: Node selectors

Assign a label to a node using the kubectl label command

Configure the pod specification by defining nodeSelector with the label of the node on which the pod should be scheduled.

Does not support complex scheduling conditions such as OR or NOT, as it only checks whether the nodeSelector label and the node label are equal.

The nodes will also accept pods that do not specify the defined label.

Key points: Node Affinity

Similar to node-selector, but supports advanced operations such as OR conditions or NOT Exists conditions.

The required type makes sure pods are scheduled only on matching nodes; the preferred type does not guarantee it.

The nodes will also accept pods that do not specify the defined label.

In this article, we briefly discussed the pod placement strategies in Kubernetes and how to enable them. Please refer to the official Kubernetes documentation for more options.

Thank you for reading. I hope you enjoyed it. I will see you in my next article.